Two years is a very long time in the world of AI, and during that time the consensus about OpenAI’s true nemesis has shifted several times. At first, we all believed Anthropic was OpenAI’s true rival. That was understandable: Anthropic was founded by former members of OpenAI’s technical and leadership teams, reportedly over disagreements about AI safety, which made it the safety-focused alternative to OpenAI.

After Anthropic, Mistral briefly emerged as a strong contender thanks to its focus on open-source models, which contrasted with the proprietary approach of OpenAI and Anthropic. However, Mistral’s impact diminished as it shifted toward more proprietary models, likely for financial reasons. Anthropic soon came back on top as the main alternative to OpenAI, releasing Claude 3.5 Sonnet, which became the best coding model, along with Artifacts. It also captured significant mindshare by pushing innovative concepts like computer use and the Model Context Protocol. Meanwhile, OpenAI kept its lead with the release of powerful reasoning models like o1.

And now we have, as I wrote a few weeks back, a new big competitor: DeepSeek.

DeepSeek Enters the Chat

DeepSeek has rapidly risen to the forefront of AI with the launch of DeepSeek-V3, an open-source model that, on DeepSeek’s reported benchmarks, outperforms GPT-4o and Claude 3.5 Sonnet. This achievement is even more remarkable considering it was developed at a fraction of the usual cost (reportedly less than $6 million for the final training run).

My first encounter with DeepSeek was through its coding model, DeepSeek-Coder-V2, available in Nebius AI Studio. This open-source model impressed me with its quality and accessibility. It’s straightforward to set up and can be fine-tuned for specific needs.

Let’s say you want to fine-tune a coding LLM so it excels at questions about your proprietary codebase. The first step is to gather all available code documentation: API references, internal developer guides, inline code comments, and any architectural diagrams that provide insight into the system.
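
To make that first step concrete, here is a minimal sketch that walks a repository and pulls out Markdown/reStructuredText pages, Python docstrings, and inline comments. It assumes a Python codebase; `collect_docs` and its record layout are hypothetical names for this illustration, and architectural diagrams would still need to be exported or described by hand.

```python
import ast
import io
import tokenize
from pathlib import Path


def collect_docs(repo_root: str) -> list[dict]:
    """Gather docs, docstrings, and comments under repo_root.

    Hypothetical helper: assumes a Python codebase with prose docs in
    .md/.rst files. Each record is {"source", "kind", "text"}.
    """
    root = Path(repo_root)
    records = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        rel = str(path.relative_to(root))
        if path.suffix in {".md", ".rst"}:
            # Developer guides, READMEs, API reference pages.
            records.append({"source": rel, "kind": "doc",
                            "text": path.read_text(encoding="utf-8")})
        elif path.suffix == ".py":
            source = path.read_text(encoding="utf-8")
            try:
                tree = ast.parse(source)
                tokens = list(tokenize.generate_tokens(io.StringIO(source).readline))
            except (SyntaxError, tokenize.TokenError):
                continue  # skip files that do not parse
            # Module, class, and function docstrings.
            nodes = [tree] + [n for n in ast.walk(tree) if isinstance(
                n, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef))]
            for node in nodes:
                doc = ast.get_docstring(node)
                if doc:
                    name = getattr(node, "name", "<module>")
                    records.append({"source": f"{rel}:{name}",
                                    "kind": "docstring", "text": doc})
            # ast discards comments, so read them from the token stream.
            for tok in tokens:
                if tok.type == tokenize.COMMENT:
                    records.append({"source": f"{rel}:{tok.start[0]}",
                                    "kind": "comment",
                                    "text": tok.string.lstrip("#").strip()})
    return records
```

Keeping the source path on every record makes it easy to trace a training example back to the file it came from.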

Next, collect a dataset of relevant code snippets, bug reports, and past developer interactions that can help the model understand real-world usage patterns. Cleaning and structuring this data is essential: remove redundant or misleading entries and put the rest into a consistent format.
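
A minimal sketch of the cleaning step, assuming the raw data has already been turned into prompt/answer pairs. The field names, the `deprecated` flag, and the 20-character threshold are hypothetical choices for illustration; the chat-style `messages` layout is a common fine-tuning format, but check what your training tool actually expects.

```python
import hashlib
import json
import re


def _normalize(text: str) -> str:
    """Collapse runs of whitespace so trivially different copies compare equal."""
    return re.sub(r"\s+", " ", text).strip()


def dedup_key(prompt: str, answer: str) -> str:
    """Hash of the normalized pair, used only to detect duplicates."""
    joined = f"{_normalize(prompt)}\n{_normalize(answer)}"
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def clean_and_structure(examples: list[dict], min_chars: int = 20) -> list[dict]:
    """Filter and deduplicate raw prompt/answer pairs, then wrap them as chat records.

    Assumed input: dicts with "prompt", "answer", and an optional hypothetical
    "deprecated" flag marking answers known to be outdated or misleading.
    """
    seen = set()
    cleaned = []
    for ex in examples:
        prompt = ex.get("prompt", "").strip()
        answer = ex.get("answer", "").strip()
        # Drop empty prompts and very short answers, which carry little signal.
        if not prompt or len(answer) < min_chars:
            continue
        # Drop entries flagged as misleading (e.g. documenting a removed API).
        if ex.get("deprecated", False):
            continue
        key = dedup_key(prompt, answer)
        if key in seen:  # duplicate once whitespace is normalized
            continue
        seen.add(key)
        # Store the original text: indentation matters in code answers.
        cleaned.append({"messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": answer},
        ]})
    return cleaned


def to_jsonl(records: list[dict]) -> str:
    """One JSON object per line, a common input format for fine-tuning tools."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```

Normalizing whitespace only for the duplicate check, not for the stored text, avoids flattening the indentation in code snippets.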

Once the data is prepared, you can fine-tune an open-weight model like DeepSeek-Coder-V2, or even V3, using a technique like LoRA (Low-Rank Adaptation), which freezes the base model’s weights and trains only small low-rank update matrices, to efficiently adapt it to your specific coding style and architecture. Hosting the fine-tuned model on a cloud platform like Nebius AI Studio makes it easy to deploy and integrate into your development workflow.
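
LoRA’s core idea fits in a few lines. For a frozen weight matrix W (d × k), it learns two small matrices B (d × r) and A (r × k) with rank r much smaller than d and k, and uses W + (α/r)·BA at inference. The pure-Python sketch below shows that merge and the parameter savings for a single matrix. The function names are illustrative, and a real run would use a library such as Hugging Face PEFT rather than hand-rolled matrices.

```python
def matmul(x: list[list[float]], y: list[list[float]]) -> list[list[float]]:
    """Multiply two matrices given as lists of rows."""
    inner, cols = len(y), len(y[0])
    return [[sum(x[i][t] * y[t][j] for t in range(inner)) for j in range(cols)]
            for i in range(len(x))]


def lora_merge(w, a, b, alpha: float, r: int):
    """Return W + (alpha / r) * B @ A, the merged LoRA weight.

    w: d x k frozen base weight; b: d x r; a: r x k.
    Only a and b are trained; w never changes.
    """
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable parameters for one matrix: full fine-tuning vs. LoRA."""
    return d * k, r * (d + k)
```

For a 4096 × 4096 projection with r = 8, `trainable_params(4096, 4096, 8)` returns `(16777216, 65536)`, so LoRA trains 256 times fewer parameters for that matrix.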

R1 vs. o1: The Battle of the Reasoning Models

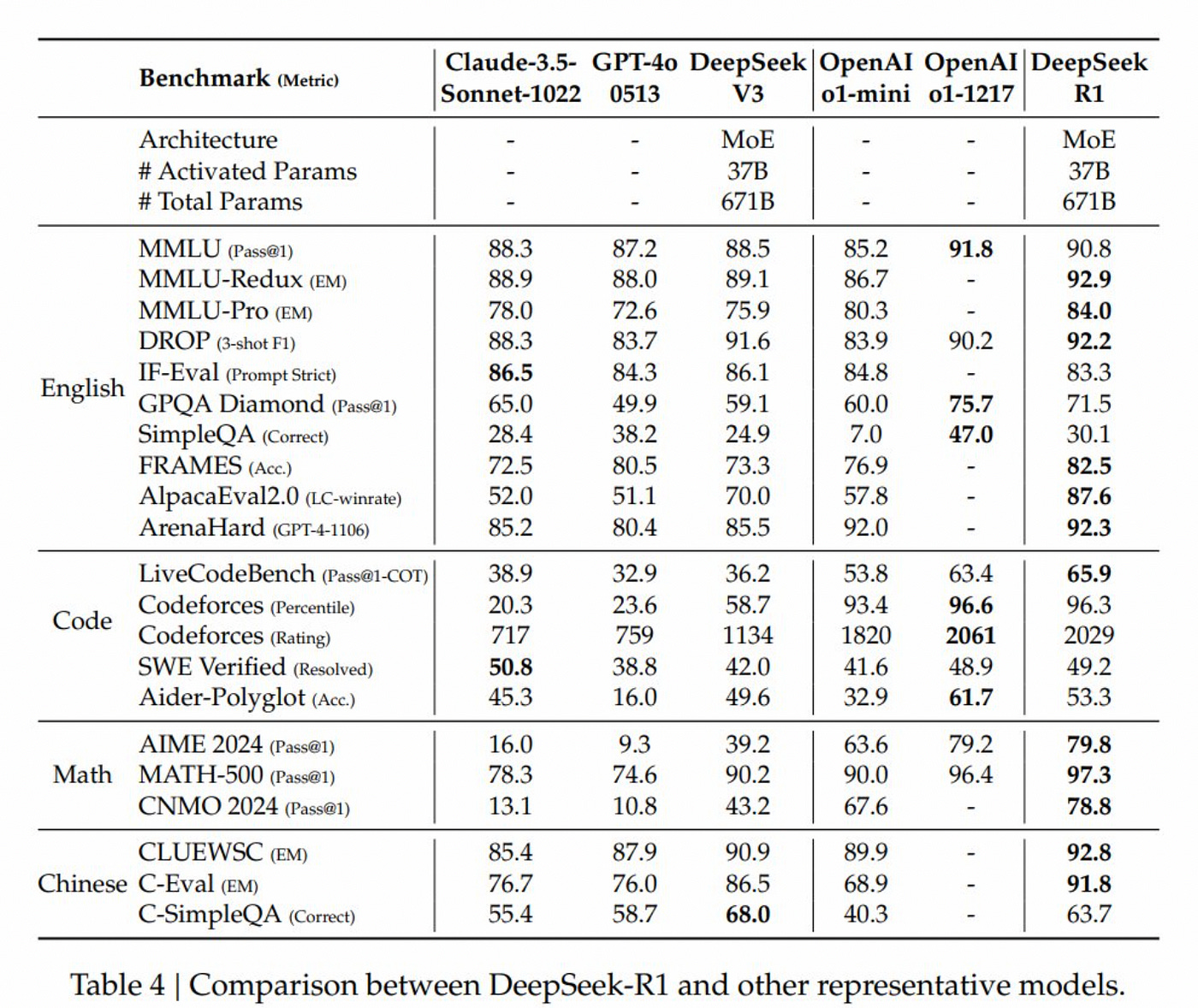

With the release of DeepSeek-R1, DeepSeek has matched the performance of OpenAI’s large reasoning model, o1, on key reasoning benchmarks, at a fraction of the cost. We have just collectively realized that OpenAI’s true nemesis is a Chinese AI startup, and that this will be the case for the foreseeable future.

DeepSeek-R1 is MIT-licensed, which makes it truly “open AI”, unlike OpenAI, which is open in name only.

R1 is 90 to 95 percent cheaper to use than o1. For high-volume workloads, that makes R1 the more economical choice.
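
To see why the discount matters most at scale, here is a back-of-the-envelope calculator. The prices are hypothetical placeholders chosen to reflect a 90 percent discount, not actual o1 or R1 quotes; plug in current published rates before drawing conclusions.

```python
def workload_cost(requests: int, in_tokens: int, out_tokens: int,
                  price_in: float, price_out: float) -> float:
    """Dollar cost of a workload, with prices quoted per million tokens."""
    total_in = requests * in_tokens
    total_out = requests * out_tokens
    return (total_in * price_in + total_out * price_out) / 1_000_000


def budget_multiplier(discount: float) -> float:
    """How many times more volume the same budget buys at a given discount."""
    return 1 / (1 - discount)


# Hypothetical placeholder prices (dollars per million tokens), not real quotes.
EXPENSIVE = {"price_in": 10.0, "price_out": 40.0}
CHEAP = {"price_in": 1.0, "price_out": 4.0}  # 90% lower on both

if __name__ == "__main__":
    job = {"requests": 1_000_000, "in_tokens": 500, "out_tokens": 2_000}
    a = workload_cost(**job, **EXPENSIVE)
    b = workload_cost(**job, **CHEAP)
    print(f"expensive: ${a:,.0f}  cheap: ${b:,.0f}  saved: {100 * (1 - b / a):.0f}%")
    # prints: expensive: $85,000  cheap: $8,500  saved: 90%
```

A 90 percent discount stretches a fixed budget 10 times further and a 95 percent discount 20 times further, which is why the gap grows with usage.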

DeepSeek also released six smaller open-source models distilled from R1, ranging from 1.5B to 70B parameters. Their performance is also impressive. Take, for example, DeepSeek-R1-Distill-Qwen-1.5B: it is a small model, yet it beats GPT-4o and Claude 3.5 Sonnet on math benchmarks (AIME 2024 and MATH-500, see image below). In the past two years, I haven’t seen anything condense such a high level of capability into such a small model.

One interesting conclusion from the R1 paper is that the reasoning patterns of larger models can be distilled into smaller models, yielding better performance than applying reinforcement learning directly to the small models.
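
The R1 paper describes its distilled models as smaller Qwen and Llama models fine-tuned on samples curated with R1, rather than trained with reinforcement learning. The sketch below covers only the data side of that idea: sample reasoning traces from a teacher and keep those that reach a correct answer. `teacher` and `extract_answer` are hypothetical stand-ins for a model call and an answer parser, and filtering on correctness is a simplifying assumption about how the samples were curated.

```python
from typing import Callable


def build_distillation_set(
    problems: list[tuple[str, str]],
    teacher: Callable[[str], str],
    extract_answer: Callable[[str], str],
    samples_per_problem: int = 4,
) -> list[dict]:
    """Collect teacher reasoning traces whose final answer matches the reference.

    problems: (question, reference_answer) pairs.
    teacher: hypothetical callable returning a full reasoning trace.
    extract_answer: hypothetical parser pulling the final answer from a trace.
    """
    dataset = []
    for question, reference in problems:
        for _ in range(samples_per_problem):
            trace = teacher(question)
            # Rejection sampling: keep only traces that reach the right answer.
            if extract_answer(trace) == reference:
                dataset.append({"prompt": question, "completion": trace})
                break  # one good trace per problem is enough for this sketch
    return dataset
```

The resulting prompt/completion pairs would then feed an ordinary supervised fine-tuning run on the smaller student model.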

We Have No Moat

All this made me think of the now-infamous leaked internal Google document that declared: “We Have No Moat, And Neither Does OpenAI”. That thesis now seems prescient. With DeepSeek-R1, open-source AI has effectively caught up with proprietary models. There is no going back, and no putting the genie back in the bottle. Expect more innovations in the coming months, and expect them to arrive at breakneck speed.

This is my first reaction. I will do a more detailed analysis of the R1 paper later.

For now, DeepSeek’s trajectory strongly suggests that it is the true nemesis of OpenAI. Its open-source approach and innovative strategies, even under tight resource constraints, are reshaping the AI landscape.

For the foreseeable future, DeepSeek is the competitor to watch.
